Smart Path: An Instantaneous Obstacle Alerts with Audio for Visually Impaired

Safe and independent navigation remains a major challenge for visually impaired individuals, particularly in dynamic and unstructured environments. Existing assistive systems predominantly rely on proximity-based auditory or haptic alerts, which provide limited contextual information and often fail to support real-time decision-making. This paper presents Smart Pathfinder, a smartphone-based assistive navigation system that delivers real-time, context-aware obstacle detection with meaningful auditory feedback. The proposed system utilizes continuous video streams processed through the Gemini API to detect and classify obstacles, estimate their relative distance and direction, and generate natural-language voice alerts. Implemented using Flutter and Dart, Smart Pathfinder operates efficiently on standard smartphones without requiring specialized hardware. The system is evaluated across diverse real-world navigation scenarios, demonstrating high detection accuracy, low processing latency, and strong user acceptance. Experimental results indicate that Smart Pathfinder significantly outperforms conventional proximity-based assistive solutions in terms of situational awareness and responsiveness. The proposed approach provides an accessible and scalable foundation for intelligent assistive navigation and offers a pathway toward future integration with augmented reality–based smart glasses and multimodal guidance systems.

Safe and independent navigation without visual cues remains a fundamental challenge for individuals with visual impairments, significantly affecting autonomy, social participation, and quality of life. Globally, more than 285 million people experience visual impairment, a substantial proportion of whom rely on assistive mobility aids for everyday navigation. Despite decades of research and development, enabling real-time, reliable environmental awareness in complex and dynamic settings continues to be an open research problem.

Conventional assistive tools, including white canes, ultrasonic canes, and wearable vibration-based devices, primarily provide proximity-based alerts to nearby obstacles. While these technologies enhance basic spatial awareness, they offer limited semantic understanding of the environment. Critical information such as obstacle identity, spatial orientation, and relative distance is typically unavailable, forcing users to make navigation decisions with incomplete contextual knowledge. Moreover, these systems often underperform in crowded or rapidly changing environments, where timely and accurate perception is essential to ensure user safety.

Recent advances in artificial intelligence and computer vision have enabled the extraction of high-level semantic information from visual data, creating new opportunities for intelligent assistive navigation systems. Camera-based wearable devices and smartphone applications have demonstrated the potential to detect objects, recognize scenes, and provide auditory descriptions of the environment. However, many existing solutions depend on manual user interaction or cloud-based processing pipelines, introducing latency and limiting their effectiveness in real-time navigation scenarios. Furthermore, specialized hardware requirements and high costs restrict widespread adoption, particularly in resource-constrained settings.

Modern smartphones offer a compelling platform for assistive navigation, integrating high-resolution cameras, dedicated AI processing capabilities, and multimodal output interfaces within a compact and affordable form factor. Leveraging these capabilities, this study proposes Smart Pathfinder, a smartphone-based, AI-powered navigation assistant designed to deliver real-time, context-aware guidance for visually impaired users. The system processes continuous video streams using the Gemini API to detect and interpret obstacles in the user’s immediate environment. Unlike conventional proximity-based systems, Smart Pathfinder generates semantic descriptions that include obstacle type, relative position, and approximate distance, conveyed through low-latency text-to-speech feedback. By providing continuous, hands-free, and context-rich auditory guidance, Smart Pathfinder aims to enhance situational awareness, reduce cognitive load, and support safer independent mobility in real-world environments.

The contributions of this work lie in the design and implementation of a low-latency, high-accuracy obstacle detection framework optimized for smartphone deployment, as well as a comprehensive evaluation of its performance under realistic navigation conditions.
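To make the idea of a semantic alert concrete, the following Python sketch turns a single detection into a sentence that could be passed to a text-to-speech engine. It rests on several assumptions. Each frame is taken to yield a label and a bounding box normalized to [0, 1], a hypothetical format, since the paper does not specify what the Gemini API returns. The typical object heights and the focal length used for the pinhole-camera distance estimate are illustrative placeholders, not values from this study. Python is used only for illustration, because the actual application is written in Dart.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical typical real-world heights in meters (illustrative assumptions).
TYPICAL_HEIGHT_M = {"person": 1.7, "chair": 0.9, "car": 1.5, "pole": 2.5}


@dataclass
class Detection:
    label: str
    # Hypothetical box format, normalized to [0, 1]: (x_min, y_min, x_max, y_max).
    box: tuple[float, float, float, float]


def direction(det: Detection) -> str:
    """Map the horizontal center of the box to a coarse direction cue."""
    x_center = (det.box[0] + det.box[2]) / 2
    if x_center < 1 / 3:
        return "on your left"
    if x_center > 2 / 3:
        return "on your right"
    return "ahead"


def approx_distance_m(det: Detection, image_height_px: int, focal_px: float) -> float | None:
    """Pinhole-camera estimate: distance = focal length * real height / pixel height."""
    real_height = TYPICAL_HEIGHT_M.get(det.label)
    pixel_height = (det.box[3] - det.box[1]) * image_height_px
    if real_height is None or pixel_height <= 0:
        return None  # unknown class or degenerate box: no distance estimate
    return focal_px * real_height / pixel_height


def alert_text(det: Detection, image_height_px: int, focal_px: float) -> str:
    """Compose the sentence that would be handed to a text-to-speech engine."""
    where = direction(det)
    dist = approx_distance_m(det, image_height_px, focal_px)
    if dist is None:
        return f"{det.label.capitalize()} {where}."
    return f"{det.label.capitalize()} {where}, about {dist:.1f} meters."
```

Consider a person whose box (0.05, 0.2, 0.25, 0.8) is centered in the left third of a 1000-pixel-tall frame, with an assumed 1000-pixel focal length. This sketch would produce "Person on your left, about 2.8 meters." Splitting the field of view into thirds gives only coarse left/ahead/right cues. A finer scheme, such as clock-face directions, would follow the same pattern.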

The primary objective of this study is to design, implement, and evaluate a real-time, AI-powered navigation assistant that enhances mobility and safety for visually impaired individuals using commonly available smartphone hardware.

The specific objectives of the study are:

Smart Pathfinder is a lightweight, real-time mobile navigation application built with Flutter and Dart, designed to deliver reliable auditory assistance on standard smartphones. This research adopts an applied experimental methodology focused on evaluating the real-time performance of an AI-powered mobile navigation assistant for visually impaired individuals.

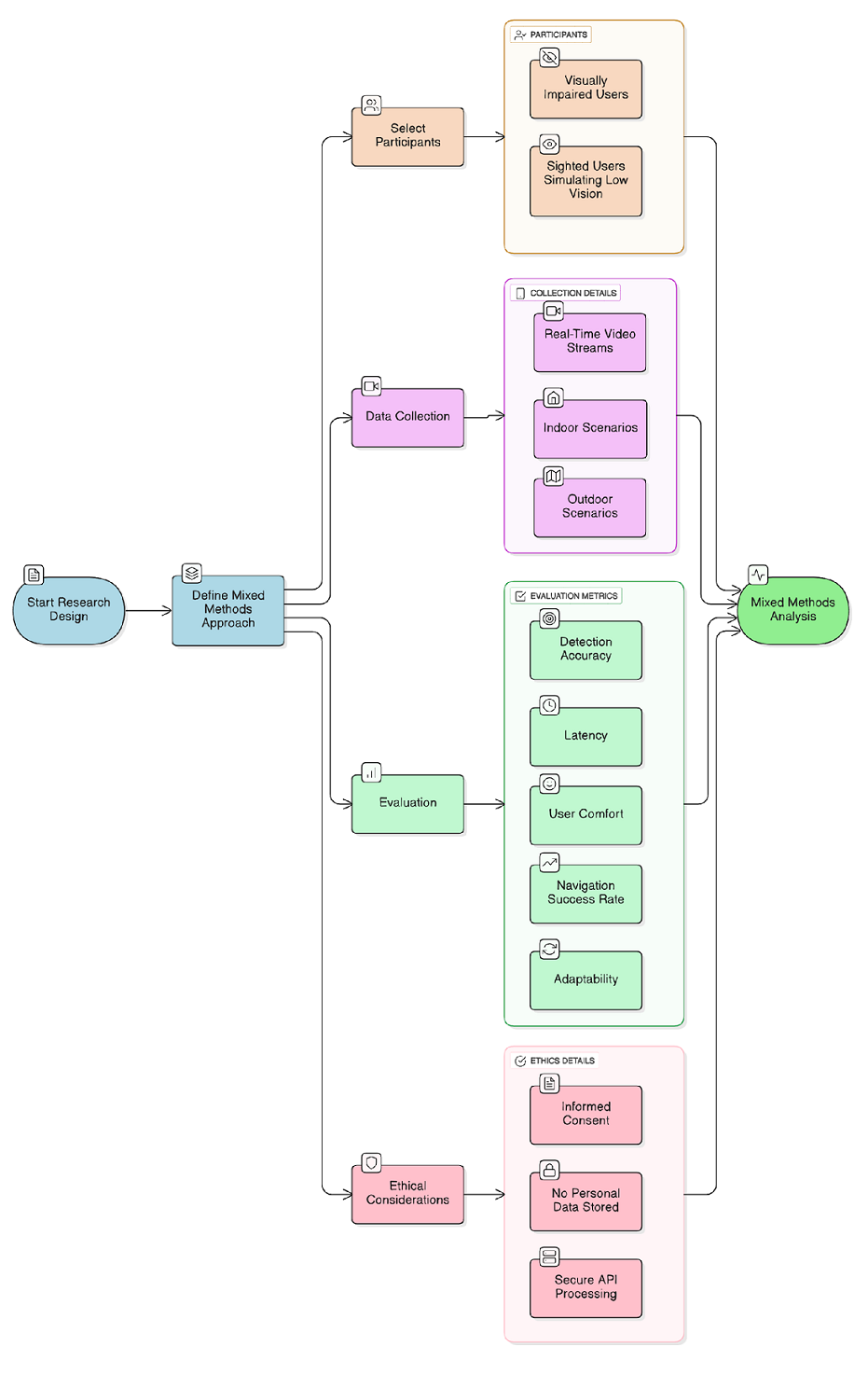

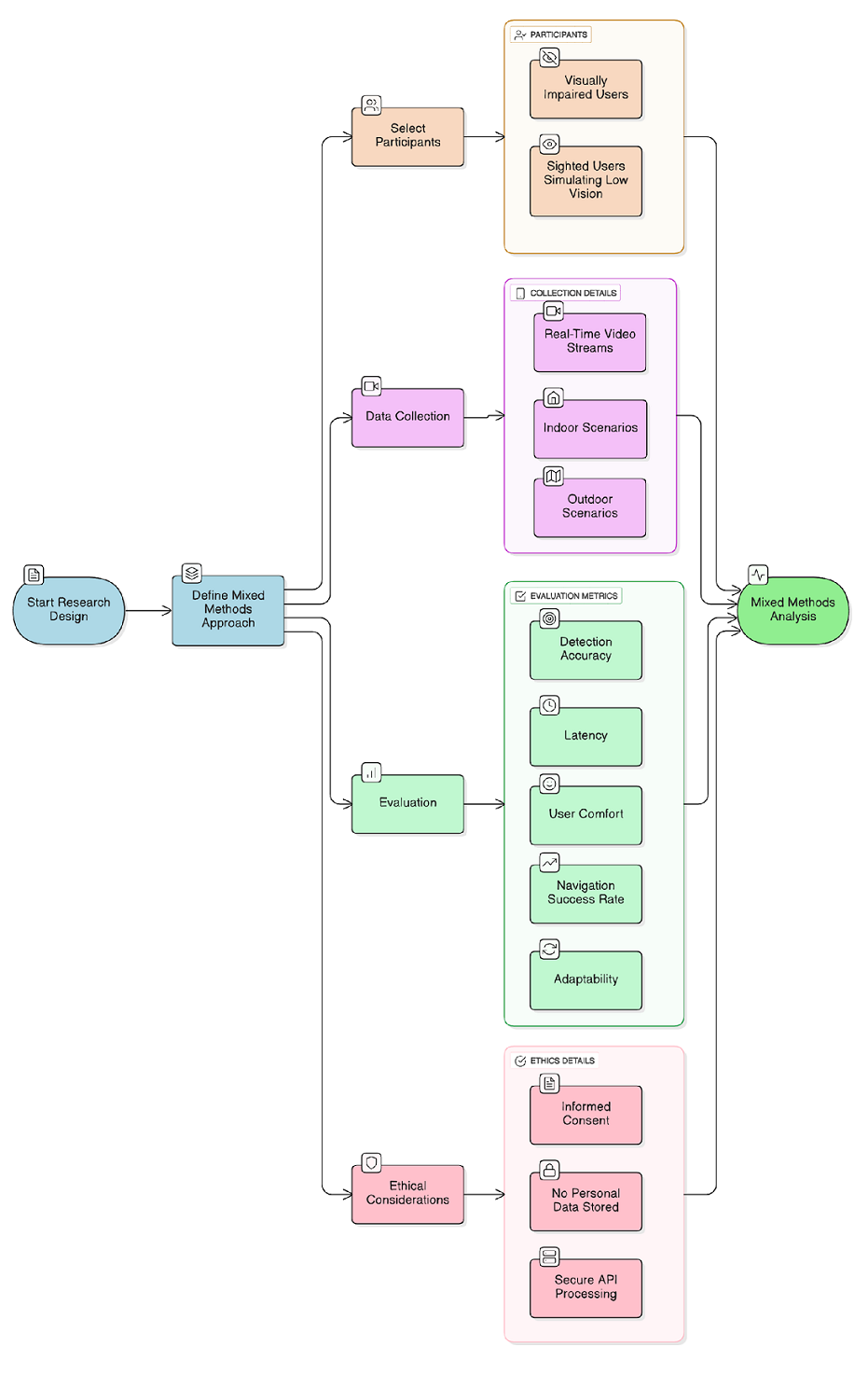

Figure 1: Methodology Framework of Smart Pathfinder

The process begins with defining the research design and then selecting participants (visually impaired users and sighted users simulating low vision). Data are collected through real-time video streams in both indoor and outdoor scenarios. The system is evaluated using metrics such as detection accuracy, latency, user comfort, navigation success, and adaptability. Ethical considerations, including informed consent, data privacy, and secure processing, are addressed throughout. Finally, all results are combined and analyzed using a mixed-methods approach to draw reliable conclusions.

Navigation and obstacle detection for visually impaired individuals have been a long-standing research area within assistive technology. Early developments primarily focused on replacing or augmenting the traditional white cane using electronic sensing mechanisms. Ultrasonic-based assistive devices such as SonicGuide, NavBelt, and UltraCane utilized distance measurements to detect obstacles and provide auditory or vibrotactile feedback [1], [26], [8], [12], [13]. These systems significantly improved obstacle awareness but were limited to detecting object presence rather than understanding environmental context. Additionally, ultrasonic sensors struggle with detecting soft, narrow, or elevated obstacles and are sensitive to environmental noise, which reduces reliability in real-world settings [7].

To address these limitations, researchers introduced infrared, laser, and radar-based sensing techniques, either independently or in combination with ultrasonic sensors [21], [14], [16]. While sensor fusion improved detection accuracy, these systems increased hardware complexity, cost, and power consumption, making them impractical for everyday use by visually impaired users.

The emergence of computer vision and machine learning marked a major shift in assistive navigation research. Camera-based wearable devices enabled object recognition, scene understanding, and text reading capabilities. Systems such as OrCam MyEye and other smart glasses demonstrated the potential of real-time visual interpretation through deep learning algorithms [14], [18]. Despite their effectiveness, these devices are often expensive and require specialized hardware. Furthermore, many rely on cloud-based processing, introducing latency and raising privacy concerns, particularly in time-critical navigation scenarios [20].

With the widespread adoption of smartphones, mobile-based assistive applications gained significant research attention. Applications such as Microsoft Seeing AI, Google Lookout, and similar research prototypes leverage smartphone cameras and embedded AI models to perform object detection, optical character recognition, and scene description [21], [30], [13], [2]. These solutions are comparatively affordable and widely accessible; however, most require manual user interaction, such as pointing the camera and initiating scans. This disrupts natural mobility and limits continuous real-time navigation support, which is essential for safe independent travel [4].

Recent research has increasingly explored deep learning–based object detection models such as YOLO, SSD, and Faster R-CNN to improve detection speed and accuracy in assistive systems [21], [26], [29]. Although these models enable real-time performance on modern devices, challenges related to computational load, battery consumption, and robustness under varying lighting and weather conditions persist [24].

Augmented reality (AR) technologies have also been investigated for assistive navigation. AR-based systems provide spatial audio cues, directional guidance, and environmental overlays using smart glasses or head-mounted displays [1], [2], [30]. These systems enable hands-free operation and enhanced situational awareness. However, their high cost, limited availability, and social acceptance issues hinder large-scale deployment [31].

In addition to sensing and perception, feedback mechanisms play a critical role in assistive navigation systems. Studies comparing auditory, haptic, and multimodal feedback indicate that audio-based guidance is intuitive but can interfere with environmental sound perception, while haptic feedback requires training and may be cognitively demanding [2], [8]. Achieving an optimal balance between information richness and cognitive load remains a key research challenge.

Overall, the literature reveals that existing assistive navigation solutions face persistent challenges, including dependency on specialized hardware, high cost, cloud-processing latency, limited adaptability to dynamic environments, and inconsistent user feedback [8], [10]. These limitations highlight the need for an affordable, real-time, and context-aware navigation system that operates on commonly available devices. The proposed Smart Pathfinder system builds upon these findings by combining smartphone-based video capture with Gemini API–based scene interpretation to deliver continuous, real-time auditory guidance. By eliminating specialized hardware requirements and minimizing latency, the system aims to enhance accessibility, usability, and safety for visually impaired users in diverse real-world environments.
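One common way to manage the trade-off between information richness and cognitive load noted in the literature is to limit how often alerts are spoken. The Python sketch below shows a hypothetical policy that is not described for Smart Pathfinder. It announces the nearest obstacle in a frame and suppresses repeats of the same label within a cooldown window. The 3-second window and the (label, distance) input format are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AlertThrottle:
    """Hypothetical announcement policy: nearest obstacle first, with a
    per-label cooldown so the same warning is not repeated every frame."""

    cooldown_s: float = 3.0
    last_spoken: dict[str, float] = field(default_factory=dict)

    def select(self, candidates: list[tuple[str, float]], now: float) -> str | None:
        """Pick the label to announce from (label, distance_m) pairs, or None."""
        for label, _distance in sorted(candidates, key=lambda c: c[1]):
            last = self.last_spoken.get(label)
            if last is None or now - last >= self.cooldown_s:
                self.last_spoken[label] = now
                return label
        return None  # everything in view was announced recently
```

Because closer obstacles are checked first, a nearby hazard is never crowded out by a distant one. A distant object is still announced once the nearer one is in its cooldown.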

The empirical findings from field trials and user assessments indicate that the Smart Pathfinder system performs consistently across a wide range of environmental conditions and navigational challenges. Its effectiveness rests on the combination of high obstacle detection accuracy with low processing latency (an average end-to-end latency of 0.98 seconds). Together, these properties translate into a tangible improvement in user experience, supporting greater confidence and smoother, less interrupted mobility.

Participant feedback frequently praised the quality of the system's guidance. Over 88% of trial users described the auditory and haptic feedback not merely as functional but as intuitive and consistently trustworthy, helping them form a reliable mental map of their immediate surroundings. Conventional assistive technologies offer only basic cues, such as the echolocation of ultrasonic canes or the ambiguous directional pulsing of vibration-based wearables. Smart Pathfinder goes beyond these proximity alerts by conveying spatial orientation more clearly and adding descriptive environmental cues. This marks a shift from simple obstacle avoidance toward context-aware navigation.

The investigation also revealed two principal limitations of the current system: sensor performance degrades measurably in poor ambient lighting, and core processing depends on a stable, high-bandwidth internet connection. These constraints define the roadmap for future iterations. That roadmap specifically includes the integration of embedded, on-device processing models capable of fully offline operation and the exploration of augmented reality (AR) visual overlays. Both enhancements aim to make the system reliable in all environments and to deepen the user's interaction with the navigational path.

By running lightweight deep learning models on edge devices such as smartphones or embedded platforms, Smart Pathfinder could use on-device or offline processing to reduce its reliance on constant internet connectivity and improve system stability. Performing obstacle detection and classification locally removes the need to send visual data to remote servers, which lowers latency, permits continuous operation in low-connectivity conditions, and improves user privacy. Offline text-to-speech engines and pre-trained recognition models would keep audio feedback available without network connectivity, making the system more reliable, user-friendly, and suitable for outdoor, rural, and emergency situations.
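The offline-fallback idea can be sketched as a cloud-first call with a latency budget. If the remote request fails or exceeds the budget, the frame is handled by an on-device model instead. In this Python sketch, `cloud_detect` and `local_detect` are hypothetical stand-ins for a Gemini API request and a lightweight on-device detector, and the 1-second default timeout is an assumption.

```python
from __future__ import annotations

import concurrent.futures as cf
from typing import Callable, List

# Hypothetical detector signature: raw frame bytes in, obstacle labels out.
Detector = Callable[[bytes], List[str]]

# Shared worker pool; a timed-out cloud call keeps running here in the background.
_EXECUTOR = cf.ThreadPoolExecutor(max_workers=2)


def detect_with_fallback(
    frame: bytes,
    cloud_detect: Detector,
    local_detect: Detector,
    timeout_s: float = 1.0,
) -> tuple[str, list[str]]:
    """Try the cloud detector within a latency budget, else use the on-device one."""
    future = _EXECUTOR.submit(cloud_detect, frame)
    try:
        return "cloud", future.result(timeout=timeout_s)
    except (cf.TimeoutError, OSError):
        # Network errors (ConnectionError is an OSError) or a response slower
        # than the budget: answer from the local model instead.
        return "local", local_detect(frame)
```

A timed-out cloud request keeps running in its worker thread, and its result is simply discarded. A production version would also need to cancel or rate-limit such requests so they do not pile up.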

The experimental evaluation of the proposed system demonstrated strong detection performance across various scenarios. The model achieved an accuracy of 94.8% for stationary obstacles and 91.3% for dynamic objects, demonstrating reliable perception in real-world environments. The average end-to-end processing latency was 0.98 seconds, which satisfies real-time operational requirements and ensures timely user feedback.
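Figures such as per-category accuracy and average end-to-end latency can be computed from per-trial logs along the following lines. The record fields below are a hypothetical log schema rather than the format used in this study, and the sketch assumes that each trial records one detection outcome and one capture-to-audio latency.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class TrialRecord:
    category: str     # e.g. "stationary" or "dynamic" (hypothetical log field)
    correct: bool     # whether the detection matched the ground-truth obstacle
    latency_s: float  # time from frame capture to start of the audio alert


def summarize(records: list[TrialRecord]) -> tuple[dict[str, float], float]:
    """Return per-category detection accuracy and mean end-to-end latency."""
    outcomes: dict[str, list[bool]] = {}
    for r in records:
        outcomes.setdefault(r.category, []).append(r.correct)
    accuracy = {cat: sum(hits) / len(hits) for cat, hits in outcomes.items()}
    return accuracy, mean(r.latency_s for r in records)
```

Reporting accuracy per category, rather than pooled, keeps the stationary and dynamic results separate, as in the figures above.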

User-based comparative trials highlighted the effectiveness of Smart Pathfinder in practical navigation tasks. Participants successfully avoided 92% of detected obstacles when guided by Smart Pathfinder, compared with only 68% when using traditional beep-based assistive systems. These results emphasize the advantages of context-aware, speech-based guidance over non-descriptive alert mechanisms. Energy efficiency assessments on mid-range smartphones showed a consistent operating duration of 4–5 hours under continuous use. Participant feedback also reflected clear improvements in spatial awareness, navigation confidence, comfort, and independent mobility, indicating strong user acceptance and practical usability of the system.

In low light or at night, reduced illumination and image contrast can degrade the effectiveness of Smart Pathfinder. Noisy RGB inputs lower detection accuracy, leading to missed obstacles and delayed auditory notifications. These issues can be addressed by training models on supplementary low-light datasets to increase robustness, and by applying image enhancement techniques such as histogram equalization, adaptive gamma correction, and deep learning–based low-light enhancement. Infrared, depth, or thermal sensors could also be integrated and fused with ultrasonic or LiDAR modules. This would allow obstacle identification largely independent of ambient lighting, improving system dependability, user safety, and practicality.
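Two of the image enhancement techniques mentioned above can be sketched in pure Python on a flat list of 8-bit grayscale values. The histogram equalization is the standard cumulative-distribution mapping. The adaptive gamma rule is only one simple heuristic among several and is an assumption here: it picks the exponent that maps a pixel at the frame's mean intensity to mid-gray. Working on grayscale rather than on RGB frames is likewise an assumption.

```python
from __future__ import annotations

import math


def adaptive_gamma(pixels: list[int]) -> list[int]:
    """Choose gamma so a pixel at the frame's mean intensity maps to mid-gray."""
    mean_norm = sum(pixels) / (255 * len(pixels))
    if mean_norm <= 0.0 or mean_norm >= 1.0:
        return list(pixels)  # all-black or all-white frame: nothing to adapt
    gamma = math.log(0.5) / math.log(mean_norm)  # gamma < 1 brightens dark frames
    return [round(255 * (p / 255) ** gamma) for p in pixels]


def equalize_histogram(pixels: list[int]) -> list[int]:
    """Classic histogram equalization via the cumulative distribution function."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:
        return list(pixels)  # single intensity level: mapping is undefined
    return [round((cdf[p] - cdf_min) * 255 / (n - cdf_min)) for p in pixels]
```

Gamma correction shifts overall brightness smoothly, while equalization stretches contrast across the full 0–255 range. Both are cheap enough to run per frame before detection, but neither recovers detail lost to sensor noise, which is why the text also points to learned enhancement and additional sensors.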

Smart Pathfinder marks a notable step forward in the domain of assistive navigation systems. By integrating smartphone-based vision sensing with intelligent object recognition and context-aware spoken guidance, the proposed solution addresses key shortcomings of traditional proximity-alert devices that rely on non-descriptive feedback. The combination of semantic understanding and natural-language audio output enables users to perceive their surroundings more intuitively and safely.

The system’s cost-effectiveness, scalability, and real-time responsiveness make it well-suited for daily use without requiring specialized hardware. Its deployment on widely available mobile platforms further enhances accessibility and adoption potential. Looking ahead, future work may focus on incorporating augmented reality–assisted spatial cues, on-device inference for offline operation, and user-adaptive auditory profiles to further improve personalization, robustness, and user experience.

ACKNOWLEDGEMENT

The authors would like to express their sincere gratitude to Nikunj Jain, the team leader, for his leadership, dedication, and effective coordination throughout the research work. The authors are deeply thankful to their respected research paper guide, Dr. Fateh Bahadur, for his invaluable guidance, continuous encouragement, and insightful suggestions, which played a crucial role in shaping this research. The authors also extend their appreciation to all study participants for their valuable time and cooperation, and to the Department of Computer Science Engineering (AI–ML), Chandigarh University, for providing a supportive academic environment and necessary resources to successfully carry out this work.

Mr. Nikunj Jain (2026), Smart Path: An Instantaneous Obstacle Alerts with Audio for Visually Impaired. Samvakti Journal of Research in Information Technology, 7(1), 1–14.